With Olivier TESQUET (@oliviertesquet), journalist specialising in new technologies at Télérama (@Telerama), and Nathalie VANDYSTADT, coordinator of communication and external relations at the European Artificial Intelligence Office.

Moderated by Philippe LALOUX (@philaloux), economic journalist for the newspaper Le Soir (@lesoir).

Key issues

Who innovates and who regulates AI in Europe? Artificial intelligence is now an integral part of Europeans’ digital practices and is spreading into more and more fields. American companies dominate the European market, holding a near-monopoly that seems unchallenged, even though some startups are emerging on the Old Continent. In the face of these digital upheavals, the European Union aims to regulate AI and to warn citizens about the risks it poses. To this end, it adopted the AI Act, a text that sets out the potential dangers of AI use, and the European Commission created the European Artificial Intelligence Office to oversee its implementation.

What they said:

Olivier Tesquet: “The AI market in Europe is monopolised by Big Tech. They either develop software internally or buy into companies, as Microsoft did with OpenAI.”

“The cost of entering this market is so high that it leaves little room for smaller players, who often have a more ethical vision of AI.”

“Europe’s issue lies in its inability to assert itself in the tech industry. However, it has succeeded in one area: being a normative power, excelling at creating rules.”

“The danger for journalism is that AI fosters a form of automation, with a desire to replace journalists or editorial functions, including translation.”

Nathalie Vandystadt: “I followed the negotiation of the AI Act […]. Initially, it was focused on the internal market. It was a very long negotiation. During this period, many things changed, particularly with the arrival of ChatGPT.”

“AI is two things: a lot of data and computing power. Over the past decade, these two factors have enabled significant advancements.”

“As for generative AI, we’re not going to stifle innovation. In Europe, we don’t have giants but rather startups. They will be subject to rules, but we will also support them. Regulation goes hand in hand with innovation. We shouldn’t pit them against each other.”

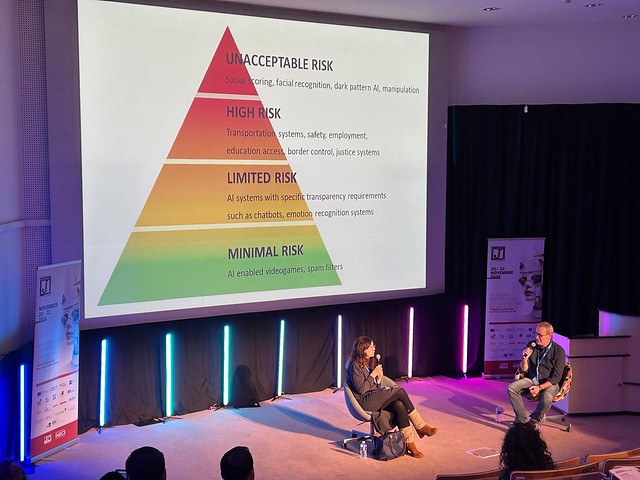

The AI Act defines four levels of risk. Photo: Camille COMBRET/EJC

Takeaways

While Europe does not stand out for its ability to dominate the AI innovation market, it distinguishes itself through its normative role. The AI Act, whose implementation is overseen by the European Artificial Intelligence Office, seeks to regulate practices and prevent abuses related to AI use.

The text identifies four levels of risk. Minimal risk covers applications such as video games or spam filters. Limited risk concerns systems such as chatbots or emotion recognition. High risk involves key areas like education, transport and employment. Unacceptable risk covers prohibited systems, such as social scoring of the kind seen in the USA or China, which could deny people access to loans or university places.

The AI Act is currently being implemented. From February 2025, companies will no longer be able to market AI systems that the text prohibits. A code of practice for general-purpose AI is due to take effect in August of the same year. This is a considerable undertaking, as the Office must take all market players into account.

Léo SEGURA (EPJT)